Computer networks

Index

- Network Types
- Topologies
- IP and MAC addresses
- Network components
- Network media
- Networking evolution
- Client-server models
- Network models
- Services

Network Types

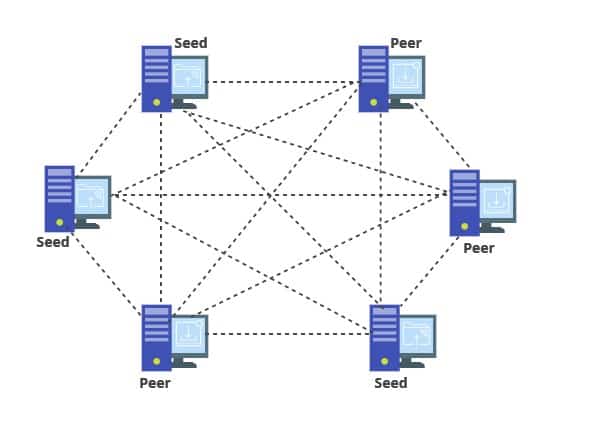

P2P

A peer-to-peer (P2P) network is a computer network in which two or more devices (peers) are connected directly to each other. Historically, file-sharing programs such as Napster used P2P networks to share files between the devices that hosted them. The original Napster service shut down in the early 2000s and was succeeded by other P2P file-sharing services such as Soulseek. Torrenting is another example of a peer-to-peer network: someone who has a torrent on their system shares it with others by seeding it. Once another person has downloaded the torrent, they also become a seeder, so there are now 2 people seeding it, and a new downloader can fetch pieces from both, usually getting more from whichever has the faster connection.

Many peer-to-peer networks are used to transfer files, and one of the main uses is torrenting copyrighted material, which is illegal in most countries. People are usually reported for this because a copyright holder seeds popular torrents of their own material; whilst seeding, they can see the IP address of anyone who is downloading that torrent. They then send a complaint to the ISP, and the ISP can pass the complaint on to the household that the address belongs to. One way people circumvent this is by using virtual private networks (VPNs).

PAN

A PAN (Personal Area Network) connects personal devices to each other, with a range of only a few centimeters to a few meters. Common examples are peripherals connected to a device, such as wireless earphones or a wireless mouse. Bluetooth uses a master-slave paradigm, where the system (a PC, for example) is the master whilst devices such as mice or earphones are the slaves.

One of the security risks of a PAN is that if one connected device is insecure, the devices it is connected to can be exposed to the same vulnerabilities.

A PAN is a good choice for short-range connections. Over a longer range a different type of network is needed, since a PAN only works within a small area.

Alternatives: LAN, WAN

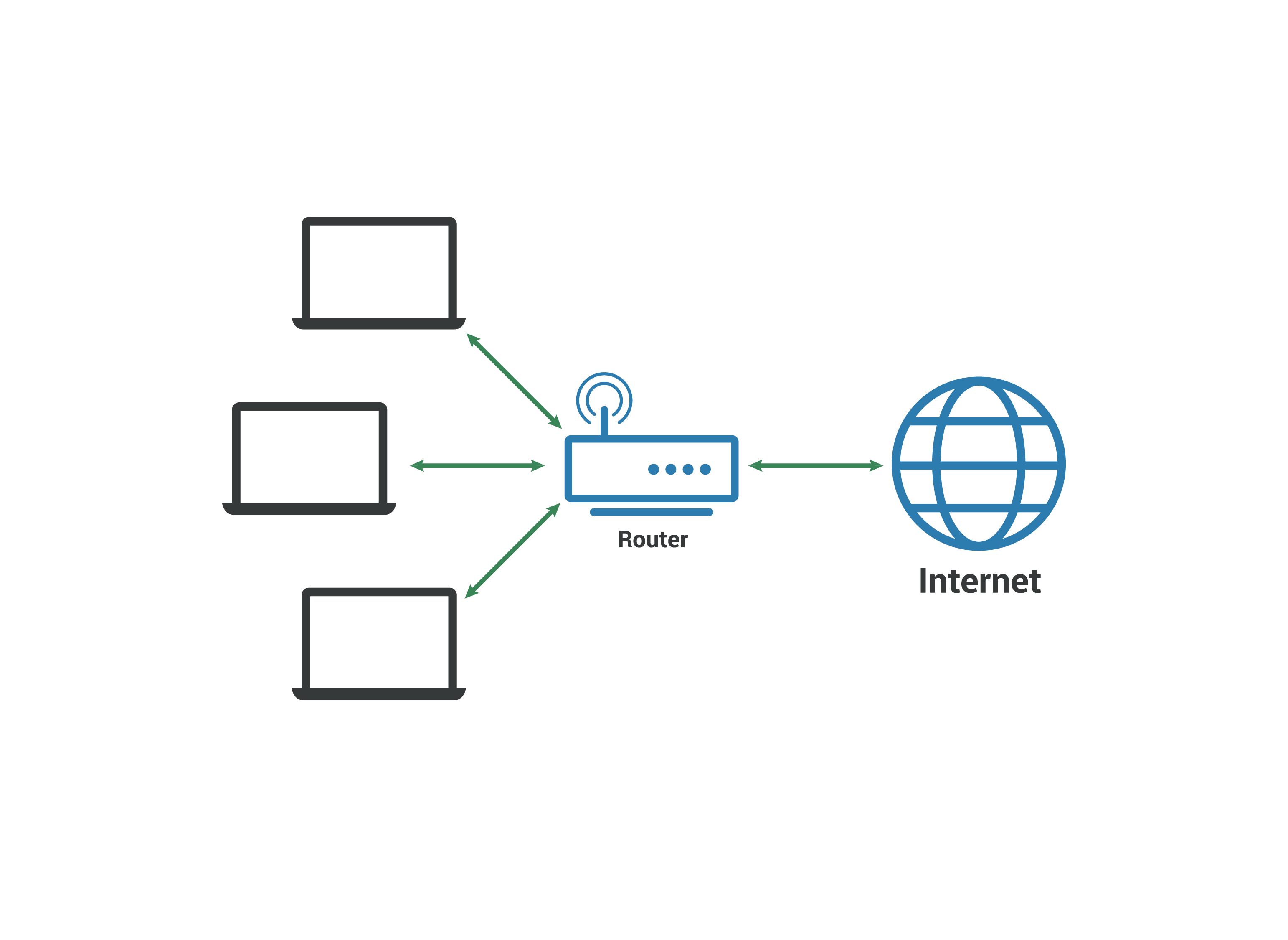

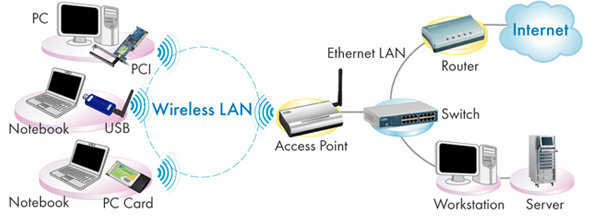

(W)LAN

A LAN is a collection of devices within a private area, such as a single building, which all connect to a switch. The switch connects to a router, which lets the devices reach other networks such as the internet. A wired connection uses something like Ethernet running from the router or switch to the device, whilst a wireless connection uses something like Wi-Fi.

If an attacker gets onto the network, usually by logging into the router, they can do malicious things that could lead to the loss of the internet connection or the theft of sensitive data. One way to help prevent this is to change the default password that comes with the router, since default passwords are insecure and can be brute forced fairly easily.

A LAN works well for a small network and is quite secure, especially compared to a PAN.

Alternatives: WAN

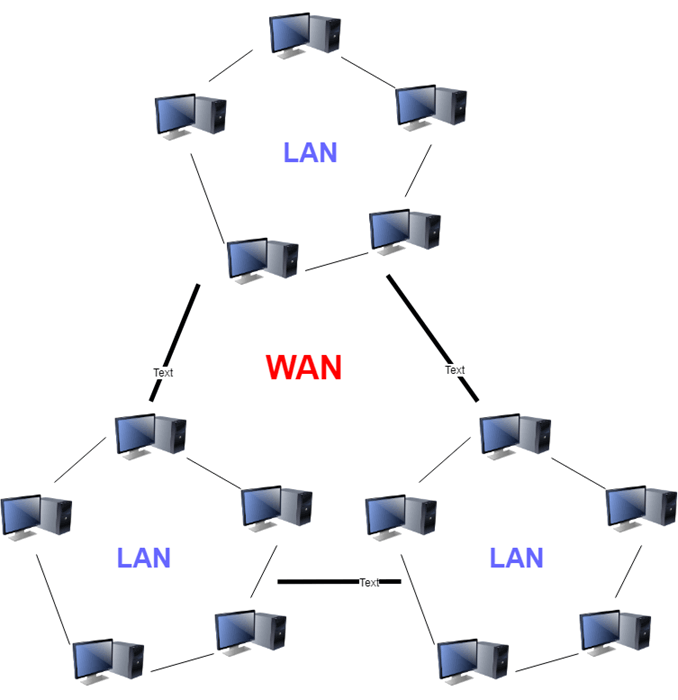

(W)WAN

A WAN is a unified network composed of multiple LANs that are connected so they can interact with each other. The internet is an example of a WAN, with many different LANs connected together so that they can communicate.

With such a wide scope, a WAN can be considered a lot less secure than a smaller network such as a LAN, and its sheer size brings its own issues, since a fault can affect a large part of the network.

Alternatives: LAN

CAN

A CAN (Campus/Corporate Area Network) is a collection of LANs dedicated to a campus or a corporation. It is larger than a local area network whilst being smaller than a metropolitan area network.

The issues that can arise on a CAN are usually the same as on other networks. However, because everything is connected to a central server, a single attack could take a whole campus down, and stolen files could include personal work or things such as grades.

Alternatives: WAN

MAN

A MAN (Metropolitan Area Network) is a network that connects devices and users within the geographical area of a metropolitan city. In a MAN, services such as free Wi-Fi may be offered to users in the metropolitan area, such as in New York, but a MAN can also be an interconnection between larger organisations such as CERN.

Security issues can arise on a MAN because services such as free public Wi-Fi usually need no password and use no encryption to connect to the network.

Alternatives: WAN

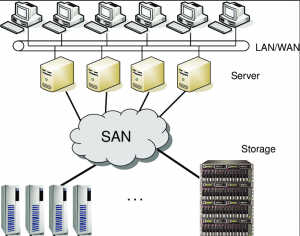

SAN

A SAN (Storage Area Network) is a dedicated network for data storage. A SAN usually consists of a network with multiple storage devices such as HDDs, SSDs or tape libraries. SANs also allow for automatic backups of the data and monitoring of the storage.

Most threats to a SAN relate to the stored data itself and to anything that could damage the storage devices.

Topologies

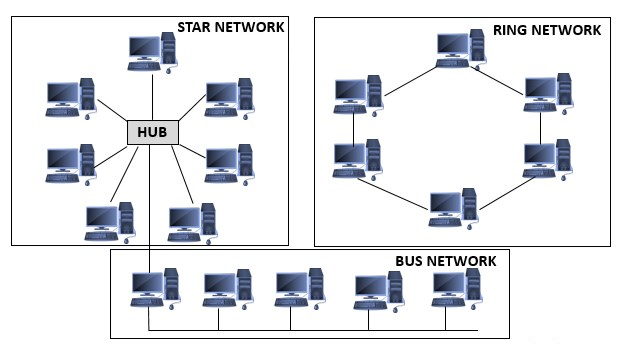

Star

The star is the most popular network topology: every individual network device is connected to a central hub. A device sends its signals to the central hub, which then passes them on to the other devices. A star topology is relatively inexpensive because each device needs only one I/O port to reach the hub, but it gets more expensive as more hardware such as hubs and switches is added. A star topology is both reliable and unreliable. It is reliable because if one cable fails, only that one device is affected. But the central hub is a single point of failure: if it goes down, every device loses its connection. The topology is robust in nature: if you can connect a device to the central hub then it will work, and adding or removing devices causes no disruption. An issue that could arise is that if lots of devices need to be connected, you may need to buy more hubs or switches.

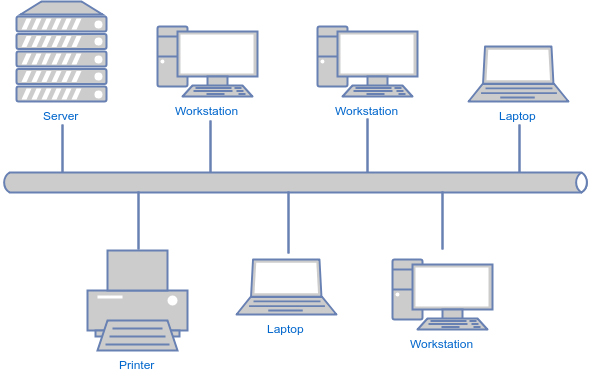

Bus

Another network topology is the bus, where nodes are attached to a single line. Bus topologies are usually used on LANs. Each node (or device) is connected to the wire, which is called tapping, and listens for packets on it. One of the disadvantages of a bus topology is that every bit of data that travels along the central wire can be seen by all the other devices, which means that sensitive data sent to one PC could be viewed from a different device.

Ring

In a ring topology, packets are passed from computer to computer around a circle. Each computer looks at every packet and decides whether the packet is meant for it; if not, the packet is passed on to the next device. A key feature of a ring topology is that there is no central hub, so each device is connected to exactly 2 other devices. This is also a disadvantage: if one device goes down, it affects all the others. This is why there are two types of ring, single and dual. A single ring is half-duplex: data travels in one direction, and one device sends and then stops before the next device sends. A dual ring is full-duplex: data can travel in both directions at the same time, so the network can still work if one system goes down.
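The packet-passing behaviour described above can be sketched as a small simulation. This is only an illustration of a single, one-directional ring; the device names and the `ring_hops` function are made up for the example.

```python
def ring_hops(nodes, source, destination):
    """Count how many times a packet is passed on before it reaches its destination."""
    if source not in nodes or destination not in nodes:
        raise ValueError("source and destination must both be on the ring")
    position = nodes.index(source)
    hops = 0
    while nodes[position] != destination:
        # Not for this device, so pass it to the next neighbour (wrapping round).
        position = (position + 1) % len(nodes)
        hops += 1
    return hops


ring = ["A", "B", "C", "D"]  # hypothetical devices, in ring order
print(ring_hops(ring, "A", "D"))  # 3
print(ring_hops(ring, "D", "A"))  # 1
```

Because the packet only travels one way, sending from A to D takes three hops even though D is A's direct neighbour in the other direction.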

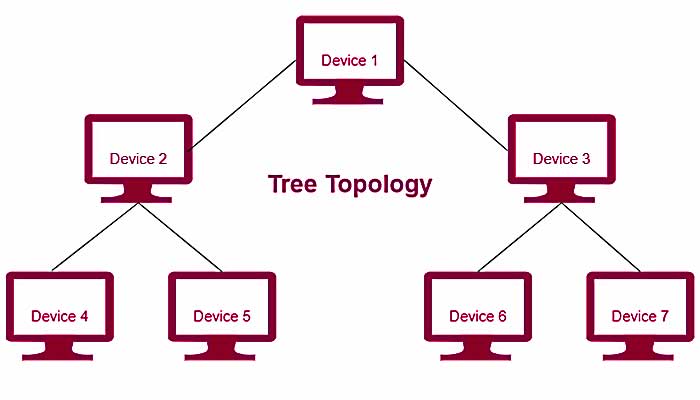

Tree

A tree topology is a hierarchical topology where connected devices are arranged like the branches of a tree, with one central point acting as the trunk. A tree topology is also known as a star bus topology, since it incorporates both a bus topology and a star topology: the main bus wire is the trunk, which links into several star topologies. One advantage of this arrangement is that new devices can be added easily to the different stars. Another is that a problem with one node does not mean the others are affected. Tree topologies are also considered to be highly secure. The main issues are that the topology is very difficult to configure and uses a lot of cabling, both of which increase how much it costs to set up and maintain.

Mesh

In a mesh topology, each device is connected to every other device in the network, which means that if a device or connection goes down, traffic can be routed through a different device. This topology also provides good privacy and security. The disadvantages are that it is extremely expensive because of how much wiring goes into it, and the amount of wiring also makes a mesh very hard to install and configure. Another issue is that because traffic is rerouted, a single failed connection can go unnoticed; but if several connections go down then multiple devices are affected, and the maintenance that follows is costly and takes a long time.
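To see why the wiring cost grows so quickly, a rough sketch (assuming a full mesh where every pair of devices gets its own link, and a star where each device has one link to the hub) can compare the number of links needed. The function names are just for illustration.

```python
def full_mesh_links(devices):
    """Links needed when every device is wired directly to every other device."""
    return devices * (devices - 1) // 2


def star_links(devices):
    """Links needed when every device has a single cable to a central hub."""
    return devices


for n in (4, 10, 50):
    print(n, "devices:", full_mesh_links(n), "mesh links vs", star_links(n), "star links")
```

With 50 devices a full mesh needs 1,225 links, compared to 50 for a star.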

Hybrid

A hybrid topology isn't a specific topology, but instead different topologies put together. Usually these topologies are combined to suit an organisation. For example, if one department in an office uses a ring topology and another uses a star topology, connecting them results in a hybrid topology (ring topology and star topology) [1]. The advantage is that combining multiple topologies can serve exactly the purposes you need, but it can also be more expensive, and it becomes more complex the larger the network is.

IP and MAC addresses

What is an IP address

An IP address (IPv4) is a set of 4 numbers separated by dots, each in the range 0 to 255, for example 192.168.1.10. An IP address is used so the internet knows where to send things such as emails. In everyday use, your device uses its IP address to request things such as web pages from other IP addresses.
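As a small illustration of the "4 numbers between 0 and 255" rule, the sketch below uses Python's standard `ipaddress` module to split an address into its numbers and to reject invalid ones. The helper names `ipv4_octets` and `is_valid_ipv4` and the sample addresses are made up for this example.

```python
import ipaddress


def ipv4_octets(address):
    """Return the four numbers of an IPv4 address as a list of integers."""
    return list(ipaddress.IPv4Address(address).packed)


def is_valid_ipv4(address):
    """True if the text is a well-formed IPv4 address."""
    try:
        ipaddress.IPv4Address(address)
        return True
    except ValueError:
        # Raised for things like a number above 255 or a missing part.
        return False


print(ipv4_octets("192.168.1.10"))   # [192, 168, 1, 10]
print(is_valid_ipv4("256.1.1.1"))    # False
```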

A problem with IPv4 is that in 2011 the central pool of unallocated addresses ran out. This led companies such as Amazon and Microsoft to buy millions of addresses, since there was now a shortage of them, and this is where IPv6 came into play.

Compared to IPv4, IPv6 has far more addresses that can be distributed: IPv4 has around 4.3 billion, whilst IPv6 has around 340,282,366,920,938,463,463,374,607,431,768,211,456. An issue with IPv6 is that it has been slow to be implemented by ISPs, which means that lots of networks still don't have IPv6 addresses.
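These totals come straight from the address sizes: an IPv4 address is 32 bits long and an IPv6 address is 128 bits long. A quick check:

```python
# Each extra bit doubles the number of possible addresses.
ipv4_total = 2 ** 32
ipv6_total = 2 ** 128

print(f"IPv4: {ipv4_total:,}")
print(f"IPv6: {ipv6_total:,}")
```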

What is a MAC address

A MAC address is a unique identifier made up of 12 hexadecimal characters, which is used to identify an individual device on a network. Every device that can connect to a network has a MAC address.

An issue that has arisen with MAC addresses is precisely how unique they are: this allowed the NSA (National Security Agency) to track the MAC addresses of mobile devices in cities [2]. This led to MAC address randomisation during network scanning, which was introduced on Linux, Apple, Android and Windows systems.

Static

Static IP addresses are IP addresses that do not change once they have been assigned to a device. Static IP addresses are usually used for servers.

Dynamic

A dynamic IP address is the opposite of a static address: whilst a static address stays the same from when it was set, a dynamic IP address changes periodically. Dynamic IPs are usually used for residential customers because, if a customer is not using their address, it can be allocated to someone else, as opposed to static addresses, which are allocated permanently to a single customer.

Source and Destination

Every packet must have a source address and port and a destination address and port. The destination must be listening on its port, whilst the source port is usually chosen at random and doesn't need to be accessible from the outside. For example, say you want to connect to the website thisisawebsite.xyz (the connection isn't made to the domain itself but to the IP address assigned to the domain). Your device creates a packet with its own IP address as the source and the IP address of thisisawebsite.xyz as the destination; the packet is sent to that destination, and the replies that come back allow you to access the website.
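The source and destination pairs can be seen with a small experiment on a single machine. This sketch uses Python's standard `socket` module and the loopback address 127.0.0.1 so that no real website is needed; the `demo_connection` function is made up for the example, and asking for port 0 simply lets the operating system pick a free port.

```python
import socket


def demo_connection():
    """Open a TCP connection over loopback and report both ends of it."""
    # The "destination": a server listening on a port chosen by the OS.
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))
    server.listen(1)
    destination = server.getsockname()

    # The "source": the client never picks its own port, the OS assigns one.
    client = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    client.connect(destination)
    source = client.getsockname()

    # The server sees the client's source address and port on accept.
    connection, seen_by_server = server.accept()

    connection.close()
    client.close()
    server.close()
    return {"source": source, "destination": destination, "seen_by_server": seen_by_server}


result = demo_connection()
print("source      (address, port):", result["source"])
print("destination (address, port):", result["destination"])
```

The source port will differ on each run, which matches the idea that it is chosen at random, whilst the destination has to be a port that something is actually listening on.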

Classes

There are 5 different network classes, each with its own valid range of IP addresses. The value of the first octet determines which class an IP address belongs to, and the class sets the size of the network. IP addresses in the first 3 classes can be used as host addresses, whilst the other 2 have specific purposes.

| Class | First octet (decimal) | First octet (binary) | IP range | Subnet mask | Hosts per network | # of networks | Use |
|---|---|---|---|---|---|---|---|
| Class A | 0-127 | 0xxxxxxx | 0.0.0.0-127.255.255.255 | 255.0.0.0 | 16,777,214 | 126 | Government |
| Class B | 128-191 | 10xxxxxx | 128.0.0.0-191.255.255.255 | 255.255.0.0 | 65,534 | 16,382 | Medium companies |
| Class C | 192-223 | 110xxxxx | 192.0.0.0-223.255.255.255 | 255.255.255.0 | 254 | 2,097,150 | Small companies/LAN |
| Class D | 224-239 | 1110xxxx | 224.0.0.0-239.255.255.255 | | | | Reserved for multicasting |
| Class E | 240-255 | 1111xxxx | 240.0.0.0-255.255.255.255 | | | | Experimental |
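The first-octet rule from the table can be written as a short lookup. This is a minimal sketch that follows the ranges in the table; `ip_class` is a made-up helper name.

```python
import ipaddress


def ip_class(address):
    """Return the class letter (A to E) of an IPv4 address from its first octet."""
    first_octet = ipaddress.IPv4Address(address).packed[0]
    if first_octet <= 127:
        return "A"
    if first_octet <= 191:
        return "B"
    if first_octet <= 223:
        return "C"
    if first_octet <= 239:
        return "D"
    return "E"


for example in ("10.0.0.1", "172.16.0.1", "192.168.1.1", "224.0.0.5", "250.1.1.1"):
    print(example, "is class", ip_class(example))
```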

Subnet

Subnetworks (subnets) are networks within another network, and they make the network more efficient. Subnetting allows network traffic to travel a shorter distance, without passing through unnecessary routers, to reach its destination.

When a network receives data from a different network, it sorts the packets so that the route they take is the most efficient and fastest one. An IPv4 address has two parts, and the class of the address determines how long each part is.

Subnetting is used because, for example, a class A network can have millions of devices connected to it, and it could take a long time for data to find its way between them. This is why subnetting comes in handy: it narrows the IP address down to a range of devices [3].

The benefits of subnetting include:

- Reducing broadcast volume and thus network traffic

- Enabling work from home

- Allowing organisations to surpass LAN constraints such as maximum number of hosts [4]

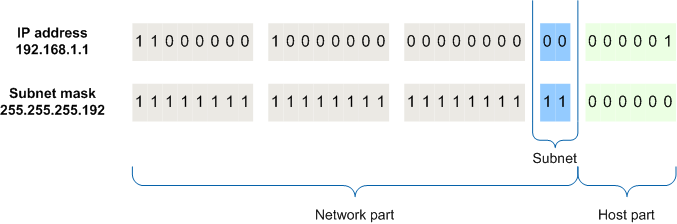

Subnet mask

A subnet mask splits an IP address into two parts: the network address and the host address. The subnet mask is a 32-bit number in which every network bit is set to 1 and every host bit is set to 0, so that applying the mask to an IP address separates the network part from the host part.
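The split works with a bitwise AND: keeping only the bits where the mask is 1 gives the network address, and keeping only the bits where the mask is 0 gives the host part. A minimal sketch, where `split_address` and the sample address are made up for illustration:

```python
import ipaddress


def split_address(address, mask):
    """Return (network address, host number) for an IPv4 address and subnet mask."""
    ip_bits = int(ipaddress.IPv4Address(address))
    mask_bits = int(ipaddress.IPv4Address(mask))
    network_bits = ip_bits & mask_bits              # keep the 1s of the mask
    host_bits = ip_bits & ~mask_bits & 0xFFFFFFFF   # keep the 0s of the mask
    return str(ipaddress.IPv4Address(network_bits)), host_bits


print(split_address("192.168.1.10", "255.255.255.0"))  # ('192.168.1.0', 10)
```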

Parts of an IP address

An IP address has 2 different parts: the network ID and the host ID. How much of the address belongs to each part depends on the class of the IP address.

The network ID is uniquely assigned to each individual network, whilst the host ID is unique to each machine on that network. This means that every device on the same network has the same network ID, and it is the host ID that changes.

CIDR

Classless Inter-Domain Routing (CIDR) is an IP addressing scheme which replaces the older class system. A CIDR address looks like a usual IP address, but at the end it has a / and a number (the prefix length) that gives the number of network bits and so determines how many hosts can go on that network. The highest number the prefix can reach is 32, which covers a single address, whilst a prefix as short as /1 covers 2,147,483,648 addresses.
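A simple CIDR calculation can be sketched with Python's standard `ipaddress` module. The `describe_cidr` helper and the sample networks are made up for this example; `strict=False` lets any address inside the network be passed in.

```python
import ipaddress


def describe_cidr(cidr):
    """Summarise a CIDR block: its network address, subnet mask and size."""
    network = ipaddress.ip_network(cidr, strict=False)
    return {
        "network": str(network.network_address),
        "netmask": str(network.netmask),
        "addresses": network.num_addresses,
    }


for block in ("192.168.1.77/24", "10.0.0.5/32", "0.0.0.0/1"):
    print(block, "->", describe_cidr(block))
```

Every bit added to the prefix halves the number of addresses, which is why /32 leaves exactly one.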

Network components

Application Gateway

An application gateway is a type of firewall that allows application-level control over network traffic. It is used to deny untrusted users access to resources on private networks.

An application gateway analyses incoming packets at the application level and passes them through a proxy to allow for secure sessions. One session is started between the user and the proxy, and another from the proxy to the server, so the proxy acts as a middle man between the user and the server.

The benefit of an application gateway is that users are prevented from accessing internal network resources directly, although application gateways are usually slower.

Transport Gateway

Transport gateways connect two computers that use different transport protocols.

For example, if a computer using TCP/IP wants to talk to a computer using another protocol, CSTP, the transport gateway copies packets from one connection to the other, reformatting them as it does so.

Router

Routers are devices that connect 2 or more networks or subnetworks. The main functions of a router are managing traffic between different networks and allowing multiple devices to share the same internet connection.

There are wired and wireless routers. Wired routers use Ethernet to connect to a modem and use separate cables to connect to each of the other devices in a network. A wireless router does the same but converts packets from binary code to radio signals and then broadcasts those through its antennas.

In addition to wired and wireless, there are other specialised types of router that serve specific functions: Core, Edge & Virtual routers.

Core: Core routers are used within large businesses to transmit large volumes of data packets across a network.

Edge: Edge routers are similar to core routers but communicate between the core routers and external networks. They use the Border Gateway Protocol to send and receive data from other LANs/WANs.

Virtual: A virtual router is a piece of software that does the same job as the hardware version of a router.

Bridges

Bridges are used to integrate multiple LANs into one, and overall a bridge should improve how the network performs. Bridges are intelligent devices which only forward selected packets from one network node to another.

A bridge performs the following functions: it receives all packets from 2 or more LANs, and it creates a table of addresses so it can see which packets are sent from which LAN.
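The address table can be sketched as a dictionary that maps each sender's address to the LAN it was seen on. This is a simplified model of how a learning bridge commonly behaves, not a description of any particular product; the class name, LAN names and short addresses are made up for the example.

```python
class LearningBridge:
    """Toy bridge that learns which LAN each address lives on."""

    def __init__(self):
        self.table = {}  # address -> LAN it was last seen on

    def receive(self, lan, source, destination):
        # Learn: the sender must be reachable through the LAN it arrived on.
        self.table[source] = lan
        known_lan = self.table.get(destination)
        if known_lan is None:
            return "flood"   # unknown destination: send to every other LAN
        if known_lan == lan:
            return "drop"    # destination is on the same LAN, no need to forward
        return f"forward to {known_lan}"


bridge = LearningBridge()
print(bridge.receive("LAN1", "aa", "bb"))  # flood
print(bridge.receive("LAN2", "bb", "aa"))  # forward to LAN1
print(bridge.receive("LAN1", "cc", "aa"))  # drop
```

Only forwarding the packets that actually need to cross between LANs is what keeps unnecessary traffic off each side.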

Switch

A switch is a component in a network which receives and forwards data packets. The difference between a switch and a router is that a switch sends data packets between the different components within a network (not just to a user's computer), whereas a router connects different networks together.

Switches can operate at two different layers. A layer 2 switch forwards data using the destination MAC address, whilst a layer 3 switch uses the destination IP address. TCP/IP specifies how data should be broken into packets, addressed, transmitted, routed and received at its destination. TCP/IP does not need much management and has been designed so that failures can be recovered from.

Firewall

Firewalls are one of the most effective ways to stop suspicious files or activity on your network from affecting your system. On Linux, for example, you could use ufw to block specific ports. For a credit card system you might want to block the HTTP port, because HTTP is insecure and sends all data unencrypted.

Software

Software firewalls are mostly used to secure a single desktop, but a small company can install them individually on each of its systems or install the software on the server. Software firewalls are usually easier to install and update than hardware firewalls. Even if your corporation has a hardware firewall, it is best to have a software firewall as well, in case you use something like a laptop and do your work on the move. Software firewalls are usually paid for, with exceptions such as Windows Firewall, which can still work for a corporation.

Hardware

A hardware firewall is a standalone device that is used to protect multiple systems or an entire organisation. Routers are a type of hardware firewall, but there are others that cost more money and are better suited to an organisation, such as rack-mounted firewalls. A UTM firewall is an all-in-one hardware firewall that incorporates multiple security methods such as intrusion protection, anti-virus, anti-malware, spam filtering, VPN and content filtering, which together provide several layers of protection during attacks. Its goal is to make managing and reporting easier for network managers. Most if not all hardware firewalls cost money because they are physical devices.

Anti-Virus

An anti-virus is a program that blocks malware from getting into the system. It can also be used for other things, such as scanning for malware that is already on your system or detecting whether a file coming into the system is unsafe. If you download a file such as a .exe on Windows, the anti-virus may warn you that the .exe might be unsafe and could be a piece of malware that will ruin your system. On Linux systems anti-virus is often considered less necessary because of how file permissions and running as administrator work. To block malicious websites on a network, I can add them to the /etc/hosts file, which lets me "block" specific websites, and with a block list I can block 1,000+ websites that are detected as hosting malware.
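A hosts-file block list is just one line per website, pointing the name at an address that goes nowhere. The sketch below only builds those lines as text and does not touch /etc/hosts itself; the domain names are placeholders, and using 0.0.0.0 as the sink address is an assumption (127.0.0.1 is also commonly used).

```python
def hosts_entries(domains, sink="0.0.0.0"):
    """Turn a list of domain names into hosts-file lines that point them nowhere."""
    entries = []
    for domain in domains:
        cleaned = domain.strip().lower()
        if cleaned:  # skip blank lines in the block list
            entries.append(f"{sink} {cleaned}")
    return entries


# Placeholder names; a real block list would be loaded from a file.
block_list = ["malware.example", "Tracker.example", "  "]
print("\n".join(hosts_entries(block_list)))
```

The output would then need to be appended to /etc/hosts with administrator rights.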

Server

A server is a computing device which is used to store, process or manage network data, devices and systems. Organisations usually use servers to hold data about their users and to provide things such as network storage, which means that people can reach their storage from any PC.

Servers come in different types of hardware, each with different uses.

Tower: A tower server is a server built within an upright case. Tower servers are usually used within smaller datacenters, since they take up a lot of physical space and are also usually noisy. Tower servers also involve lots of cabling, which takes time to manage properly, whilst rackmount and blade servers both have features designed to help with cable management.

Rack: A rack server is a server mounted horizontally in a rack rather than in an upright tower case. More than 1 rack server can be installed in a single rack, and racks usually also accommodate other equipment such as network switches, routers, battery backups, extra fans, patch panels (for better cable management) and consoles (a 1U device with a built-in screen, trackpad and keyboard for controlling a server directly). Rackmounts are commonly used in industries such as broadcasting and radio.

Blade: A blade server is similar to a rack server but is optimised to take up less physical space and use less energy. A blade server contains only I/O ports, a CPU and memory and not much else, which is useful for fitting lots of processing power into a smaller space.

Client

A client is any device that acts as an end point of the network. It is usually the device that the network is providing a connection to, such as an internet connection.

Examples of clients include:

Wireless access point

An access point is a device, typically mounted on a wall or ceiling, which creates a WLAN within small buildings or offices. An access point is connected to a router via an Ethernet cable, and devices that need Wi-Fi then connect wirelessly to the access point.

The advantages of an AP are that it allows more users to access a network, since it supports more users than a wireless router, and that it extends the range of a network, which is good within a business. The issues with APs are that some of them are expensive, especially for enterprise use (for home networks, ISPs will sometimes provide them).

Network media

Fiber

Fiber optic communication is used for telephone signals, internet connections and cable TV. Compared to copper, it performs better over long distances and also has a higher bandwidth.

The cost of installing fiber is quite large once you consider first the cost of the fiber cabling and then the cost of the labour to install the infrastructure: overall it costs around £20,000 for a mile of 96-strand single-mode fiber optic cable.

Copper

Copper wiring is used in a wide range of products, components and connections around the world. As technology improves, competitors such as fiber optic are now in the market.

Copper is one of the most conductive materials, which is important because it makes copper wiring reliable for electrical connections. It also needs less insulation and armouring, which allows for flexibility.

Wireless

A wireless network is a network without wiring such as fiber optic or copper. Examples of wireless networks include cell phone networks, which use 4G/5G connections so that someone can connect to the internet without the need for a personal network. Wireless networking started in Hawaii, where users were separated by the Pacific Ocean and the telephone system was inadequate for connecting them to the computer center.

Two different wireless technologies are 2.4 GHz and 5 GHz connections. Both have their advantages and disadvantages, so there are places where one will be used and the other won't. One important factor is how large the network range needs to be: 2.4 GHz has a larger range than 5 GHz, because 5 GHz frequencies do not penetrate solid objects as well as 2.4 GHz. In terms of cost, 5 GHz on its own is usually not an option and comes bundled with 2.4 GHz, which means that getting both 5 GHz and 2.4 GHz is usually more expensive than getting just 2.4 GHz.

Ethernet

Ethernet is a wired connection for LANs or WANs. Ethernet transmits data so that other devices are able to receive that information, usually faster than over wireless. An issue with it being wired is that over longer distances it is harder to run a cable to the router, so Ethernet connectors are used to join cables, which can negate some of the positives.

Ethernet comes in 2 different types: classic Ethernet and switched Ethernet. Classic Ethernet is the original version and ran at speeds of 3 to 10 Mbps, whilst switched Ethernet is what Ethernet is now and can run at speeds of 100, 1,000 and 10,000 Mbps, which are usually called fast Ethernet, gigabit Ethernet and 10 gigabit Ethernet respectively. Only switched Ethernet is used nowadays.
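To get a feel for what these speeds mean, the rough calculation below estimates how long a file would take to send at each speed. It is an idealised sketch: it assumes 1 megabyte is 8 megabits, ignores protocol overhead, and uses a 700 MB file purely as an example.

```python
def transfer_seconds(size_megabytes, link_mbps):
    """Ideal time to send a file: size in megabits divided by link speed in Mbps."""
    return size_megabytes * 8 / link_mbps


speeds = {
    "classic Ethernet": 10,
    "fast Ethernet": 100,
    "gigabit Ethernet": 1000,
    "10 gigabit Ethernet": 10000,
}
for name, mbps in speeds.items():
    print(f"{name}: {transfer_seconds(700, mbps):.2f} seconds")
```

Real transfers will be slower than these figures, but the tenfold jump between each generation still holds.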

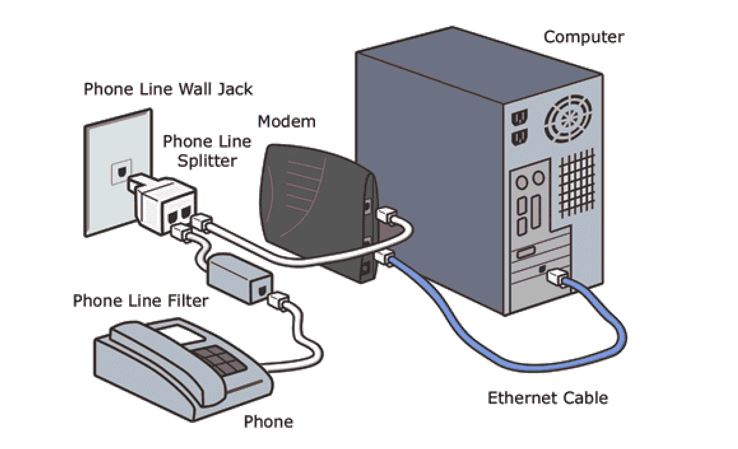

DSL

DSL is another way to connect to your ISP, using the telephone line in your household (this only works if the ISP is also your phone company). DSL started with telephone companies realising they needed a more competitive product to challenge other forms of internet access.

DSL is known for having particularly slow speeds. This is because telephone lines were created to support a duplex communication system, where you can talk to someone else who is connected, and were not designed to handle the data carried over the internet.

Wi-Fi

Wi-Fi is a wireless network technology that connects devices to a LAN in order to give them an internet connection. Wi-Fi is one of the most popular types of computer network in the world and allows a range of different devices to connect to the internet.

Wireless internet connections were originally envisioned in two different ways: one with a base station connected to a wired network (similar to how a router operates), and the other where devices would connect with each other to provide an internet connection.

It was decided that Wi-Fi should work in a similar way to Ethernet (which was what LANs used at the time), so Ethernet and Wi-Fi behave the same way: they send packets in the same manner, but Wi-Fi is wireless where Ethernet is wired.

Bluetooth

The original idea for Bluetooth was to connect devices without a wire, so that people would be able to connect their mobile devices to other devices wirelessly.

Bluetooth has its issues: it does not operate well if there is a wall between the two devices, it has a relatively short range, and it can suffer from outside signal interference.

Networking evolution

BYOD

Some threats come from unsafe practices. Bringing your own device (BYOD) could be a threat to the whole IT system at a corporation, because these devices are normally not up to the company standard for security and monitoring, which puts the data and the corporation's other employees at risk. A BYOD device can't be held to the same standard as the machines already at the workplace. For example, most devices at the corporation would need an administrator password before anything on them could be changed, but BYOD devices don't, since the person bringing the device in is its administrator. There is also the issue that they might use their own mobile data on the device instead of the company internet, which bypasses restrictions that block things like adware or websites that could be a threat to the device or could be used to damage the systems already in place at the corporation.

Virtualisation

Virtualisation uses a piece of software to create a layer over a computer's hardware and divide it into multiple other computers, usually called virtual machines. Every virtual machine runs its own operating system and behaves the same as a personal computer.

Virtual machines are environments that simulate the hardware and software of a physical computer. They have features such as snapshots, which save a history of the machine's state so that any saved point can be restored at any time. This matters because of what virtual machines are used for: you may be experimenting with different types of software and the effects they have on the computer, and if anything goes wrong, a snapshot is a good way to go back to a working state of the virtual machine and either test again or leave it.

There are different types of virtualisation, including Desktop virtualisation, Network virtualisation, Storage virtualisation, Application virtualisation & Linux virtualisation (KVM).

Desktop virtualisation is similar to what is described above: it allows you to run multiple desktop operating systems.

Network virtualisation uses a piece of software to create a view of how a network would work. It includes the different hardware elements such as switches, routers & connections, so a network admin is able to configure and manage the different parts of the network before creating that network in real life.

Storage virtualisation allows all the storage on a network, whether that is shared network storage or storage on individual PCs, to be accessed and modified as if it were a storage component of a single device. All the storage goes into one block, which can then be configured and assigned to multiple PCs.

Application virtualisation runs software without having to install it directly. The difference between this and desktop virtualisation is that it only runs the applications in a virtual environment and not the OS. There are different types of application virtualisation: local, which simply runs on the device you are using; streaming, which streams the application from an external server to a user's device; and server-based, which runs entirely on a server.

KVM is a kernel-based virtual machine which is built into the Linux kernel. It allows the user to turn Linux into a hypervisor, which is software that allows virtual machines to be created and run. KVM is useful because, being built into the Linux kernel, it gets updated at the same time the kernel does, which means that it is always up to date with any new Linux features and fixes.

Remote working

Remote working refers mostly to what the title suggest, working from home or away from the usual office life that is resigned for people who work mostly in computing. Since the rise of the internet there has been a small increase in the amount of work that can be done at home but now has had a massive boom due to some circumstances.

During the Coronavirus lockdowns of early 2020 and 2021 there was a massive rise in remote working. Because employees were unable to meet face to face without the risk of spreading the virus, many office jobs moved from the office to the home.

Because work moved online, software was needed for things such as accessing files and communicating with teams. Some of the most popular software around this time was Zoom, Google Chat and Microsoft Teams.

In the UK, 30% of the workforce works remotely at least once a week [5], and 1 in 5 Brits want to work remotely full-time [6].

Cloud

Cloud data storage is where data is stored in logical pools in a remote data centre. The aim is to make the data accessible to anyone who can log in to the cloud account, so it can be used from anywhere in the world. This is useful because the same data can be accessed from the workplace and then again from home. An example of cloud-based storage is Google Drive: data stored there can be accessed by anyone with the login to the account, and it can also be shared with other people by email address or by link, which is useful for moving files around.

Cloud vs On-Premise

On-premise data storage is where a corporation, company or school keeps its own collection of storage devices (HDDs/SSDs) for data that should not leave the premises. These devices are normally kept on or near the premises, can only be accessed by people in the organisation, and can be used to store more sensitive data that the organisation might not want to put online. The differences compared to cloud-based storage are that on-premise storage isn't online and you know where your data is, whilst with the cloud you usually do not know exactly where the data is stored (providers tend not to disclose this for security reasons). As an example of cloud-based computing, the school uses Google so that it is easier for students to share work with teachers, and for teachers to share class work through things like classrooms, instead of sending the work to everyone individually or making everyone watch the board, which may be hard for students who want to revise that lesson later. Students still have a desktop with some local space, but it isn't much and is just for saving files from applications that can't be used with Google (for example, VirtualBox).

Advantages of Cloud based storage would be:

- Data can be accessed anywhere in the world if you have a login to the account

- Data is more easily shared with other people on the network

- It is easier to set up: you only have to buy an account and then you can start storing data

- Large cloud storage providers generally invest heavily in keeping their services safe and secure

- Data is normally encrypted when it is sent

- Easy to use for backing up data

Disadvantages of Cloud based storage would be:

- You don’t know where your data is at all times

- Accounts could be hacked and the data then leaked

- Server rooms, whilst hidden, could be destroyed

- Downtime

- It requires an internet connection, and speeds depend on your internet connection rather than on the write speed of a hard drive

- It is harder to migrate data from one place to another.

Advantages of On-premise data storage would be:

- Easier to store data and find that data

- Whilst at first glance cloud storage is cheaper, over time buying physical hard drives will save money within a year (for comparison, mega.nz is £17 a month for 8TB whilst an 8TB hard drive is £170; 170/17 = 10, so after 10 months it is more cost effective to buy a hard drive).

- Better read and write speeds compared to transferring over the internet

- It is accessible without an internet connection

- It can last longer, since an issue with a cloud-based storage service may well happen before a hard drive fails

- You have full control over the storage system

- You can use a backup system like 3-2-1 (3 copies, on 2 different types of storage media, with 1 off-site), together with RAID 5, so that it would be very hard to lose data.
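The cost comparison in the list above can be sketched as a break-even calculation. The prices are the ones quoted in the text (£17 a month for cloud storage, £170 for an 8TB drive). The function name is made up for this sketch, and it ignores electricity, replacement drives and backups, so treat it as a rough estimate only.

```python
import math


def break_even_months(monthly_cloud_cost: float, one_off_drive_cost: float) -> int:
    """Whole months after which the total cloud fees reach the price of the drive."""
    return math.ceil(one_off_drive_cost / monthly_cloud_cost)


if __name__ == "__main__":
    # Figures from the text: 170 / 17 = 10 months.
    print(break_even_months(17, 170))
```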

Disadvantages of On-premise data storage would be:

- Whilst you have full control, it is harder to keep the system up and running without issues, and it could take a while to get used to

- Whilst hard drives themselves might not be as expensive, if you want the best speeds then you’re going to want solid state drives instead, which will set you back a lot of money.

- If a natural disaster were to happen then all of that storage could be lost, and with so much data it will be hard to back up

- Whilst they might not be attacked over the internet, it would be much easier to physically steal data from them than from a cloud-based service that uses lots of encryption.

- Hard drives can fail

What is best?

In a financial IT system, you will want to use cloud-based systems as little as you can. Transferring financial data over the internet carries risk: there is no guarantee that the cloud storage provider will not access the data itself, and not using the cloud at all removes this risk. It is also possible that your account is hacked, giving the hackers access to all the data in the cloud without you even being notified. Keeping financial records on-premise and offline means that even if hackers were to get into the system, it would be near impossible for them to reach the sensitive data held at a financial institution.

Different versions of web (1.0, 2.0 & 3.0)

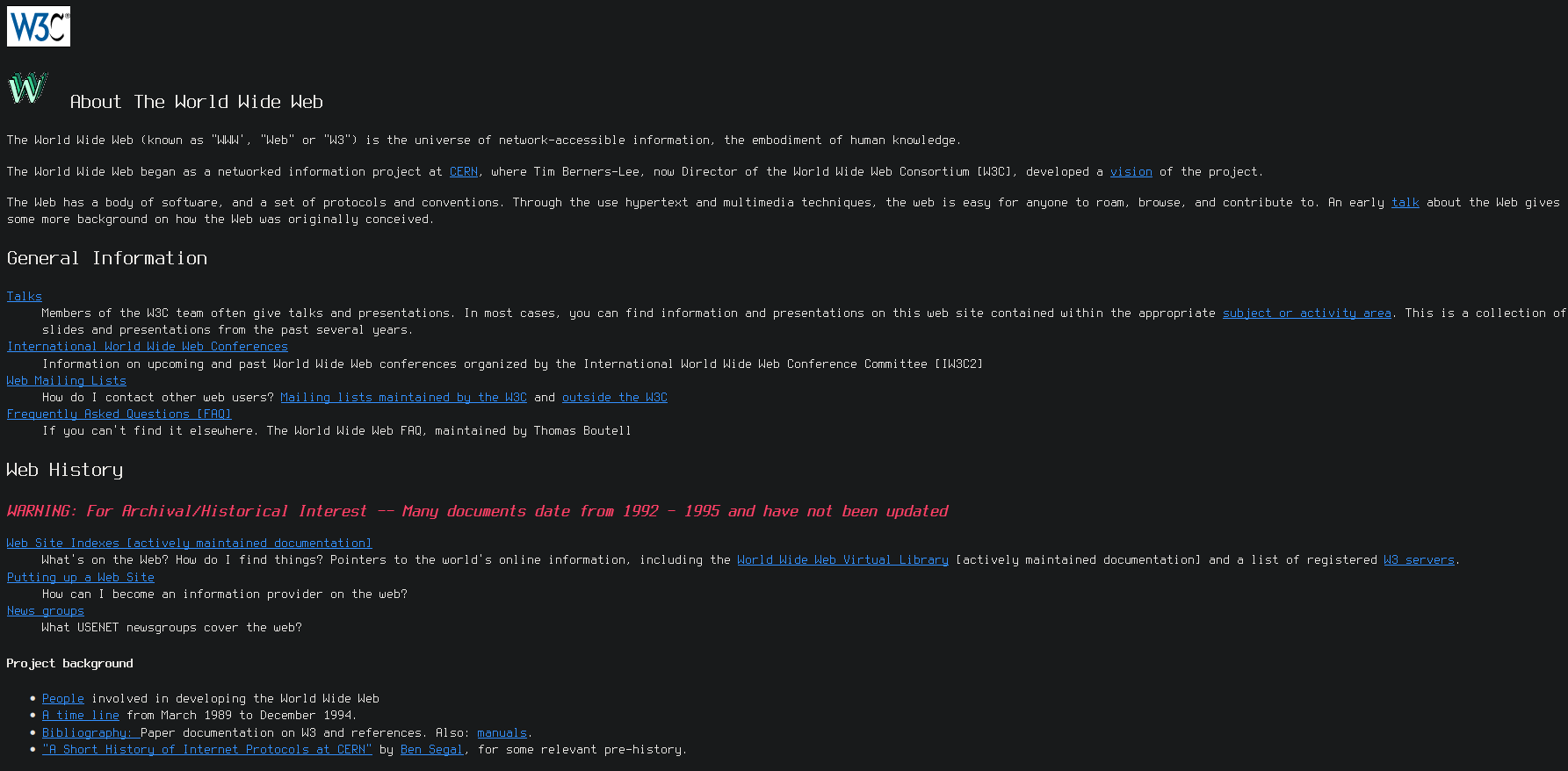

Web 1.0

Web 1.0 was the first generation of the World Wide Web as we know it. It lasted from the 1990s to the early 2000s and consisted of static web designs without much interactivity. It also started the trend of homepages, where people had their own websites to share information about themselves and details of how they could be contacted.

An example of a web 1.0 website

Web 2.0

Web 2.0 is the second version of the World Wide Web and is associated with dynamic, interactive websites. It is characterized by user interaction, rich content, and the ability to connect people and data. During this time, websites were created using a variety of technologies such as AJAX, Flash, and HTML5, and were often hosted on multiple servers. Navigation was done via rich menus and search, and websites were designed to be interacted with.

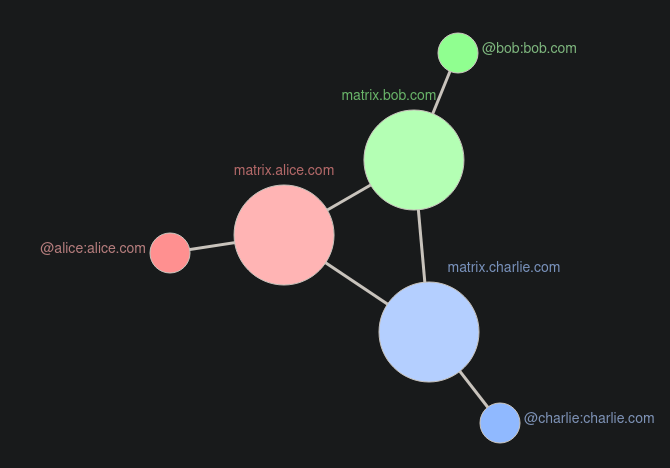

Web 3.0

Web 3.0 is the third version of the World Wide Web and is associated with intelligent, personalized websites. It is characterized by artificial intelligence, semantic web technologies, and the ability to provide a personalized experience for the user. During this time, websites are created using a variety of technologies such as AI, natural language processing, and machine learning, and are hosted on multiple servers. Navigation is done via personalized menus and search, and websites are designed to be interacted with in a conversational manner.

Web 2.0 vs 3.0

Web 3.0 is the successor to Web 2.0 and uses decentralisation, compared to Web 2.0’s centralisation. The difference is that centralised networks usually all work off one or more servers owned by the organisation providing the service, e.g. Twitter, Facebook or YouTube, whilst in decentralised networks multiple different people host their own instance of a protocol, e.g. Mastodon, PeerTube or Matrix.

Web 3.0 offers a way for people to host their own services, where Web 2.0 centralises everyone on the same service. If a Web 2.0 service goes down, it goes down for everyone, whilst in Web 3.0 if one instance goes down only that instance can’t be used, and other instances can still be used to access the network.

Another difference between Web 2.0 and 3.0 is the currency used on each. Within Web 2.0, bank transfers or web applications like PayPal were widely used to transfer money between people, but for the most part this was centralised within a bank. Web 3.0 promotes the use of cryptocurrencies, which use a blockchain to transfer tokens between people's wallets. One issue is that centralisation still exists, with websites like Coinbase holding your tokens for you, which goes against the principles of Web 3.0. There are also environmental issues: mining Bitcoin needs a large amount of computing power to earn a small amount of the currency, and its energy use has been estimated to give it a carbon footprint comparable to that of Greece [7]. Cryptocurrency has also brought its own scams, which are as common as PayPal or bank scams and usually try to get into someone's cryptocurrency wallet.

Client-server models

Thin clients

A thin client is a computing device that runs from resources on a central server rather than from a local hard drive (the thick client model). A thin client connects to a remote server, from which it gets its applications and data. This is used in organisations where a central server hosts software that every desktop can then use, instead of the software having to be installed on each device, which would take longer. Thin clients cost less up front because you don't need to buy physical hard drives, but over time a subscription for storage space may become more expensive. They are usually a lot easier to manage because of how centralised they are. One issue is that a thin client usually needs a network connection to reach the server, so without a connection you may be unable to use the device until the connection returns.

Thick clients

In the thick client model, most of the resources that the computer uses are installed locally, compared to the thin client model, which gets them across a network. A thick client is usually any device that has its own storage device, such as an HDD or an SSD. Thick clients are a lot more customisable for the specific user, who also has more control over what is downloaded locally on their device. Thick clients also work offline, with applications still working even if the device has no connection. Another advantage of thick clients is that you own the storage device and can access it, edit files and delete whatever you want, which gives you more freedom on your own system. This comes at the cost of having to purchase storage devices, which cost more the more storage you need. One issue with thick clients is that they take longer to maintain in a business, since each one needs to be maintained individually.

Network models

TCP/IP

TCP/IP stands for Transmission Control Protocol/Internet Protocol. It is a suite of communication protocols used to connect network devices to the internet.

TCP/IP specifies how data should be broken into packets, addressed, transmitted, routed and received at the destination. TCP/IP does not need much central management and has been designed so that it can recover from failures.

The two main protocols of the suite are TCP and IP.

TCP defines the rules for how applications create channels of communication across a network, and it manages breaking data into smaller packets before they are sent off.

IP defines how each packet is addressed and routed so that it reaches the correct destination: each device along the way checks the destination IP address to see where the data is being sent.
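The idea of breaking data into packets and putting it back together can be sketched in a few lines. This is a toy model only: the function names are made up, and real TCP also uses acknowledgements, retransmission and flow control, none of which are shown here. It assumes Python 3.9 or newer.

```python
import random


def to_packets(data: bytes, size: int) -> list[tuple[int, bytes]]:
    """Split data into (sequence_number, chunk) pairs of at most `size` bytes each."""
    return [(seq, data[i:i + size]) for seq, i in enumerate(range(0, len(data), size))]


def reassemble(packets: list[tuple[int, bytes]]) -> bytes:
    """Put the chunks back in sequence order and join them into the original data."""
    return b"".join(chunk for _, chunk in sorted(packets))


if __name__ == "__main__":
    message = b"hello from the network"
    packets = to_packets(message, 5)
    random.shuffle(packets)  # packets may arrive out of order
    print(reassemble(packets) == message)
```

The sequence numbers are what let the receiver rebuild the message even when the packets arrive in a different order from the one they were sent in.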

Other protocols in TCP/IP include:

HTTP which handles communication between web servers and web browsers.

HTTPS which works the same as HTTP but adds secure, encrypted communication between the two.

FTP which handles file transfers between computers.

OSI

The OSI (Open Systems Interconnection) model has 7 layers that describe how computers communicate with each other across a network. It is a standard that has been in use since the early 1980s.

The 7 layers include:

Application Layer: The application layer defines the rules used by the software that end users interact with. It provides the protocols that software such as web browsers or email clients use to send and receive data and to present it to users. This includes HTTP, FTP, POP, SMTP and DNS.

Presentation Layer: This layer prepares data for the application layer and will encode, encrypt and compress the data so it can be received correctly when given to the application layer.

Session Layer: The session layer creates sessions which act as communication between different devices and this layer opens the session, makes sure it stays open whilst the data is being moved between devices and closes the session when the communication ends.

Transport Layer: The transport layer takes the data being transferred and breaks it into "segments". On the receiving end it reassembles these segments back into data, which can then be passed to the session layer. It also includes error handling, so that if data is received incorrectly it can be requested again.

Network Layer: The network layer has two main functions. One of these functions is breaking up the segments into packets and then reassembling those packets on the receiving end. The second function is making sure that packets have the best path across physical networks (take the fastest routes), this uses IP addresses to see the destination of packets.

Data Link Layer: The data link layer creates and removes connections between two connected network nodes. This layer has two parts. The first is logical link control, which identifies the network protocols used, performs error checking and synchronises frames. The second is media access control, which uses MAC addresses to connect devices and define permissions.

Physical Layer: The physical layer refers to the physical cables or wireless connections between network nodes. Its job is to define the connector that links the devices and to transmit the raw data between them.
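The way data passes down through the layers on the sender and back up on the receiver can be sketched as wrapping and unwrapping. This is a simplified illustration, not how real network stacks are written: the bracketed labels are made up for the sketch, every upper layer is shown adding a header (in practice not all of them do), and the physical layer is modelled as simply turning the frame into raw bytes.

```python
# Layers that add a header in this toy model, from the top of the stack downwards.
HEADER_LAYERS = ["application", "presentation", "session", "transport", "network", "data link"]


def send(payload: str) -> bytes:
    """Wrap the payload in one labelled header per layer, then emit raw bytes."""
    for layer in HEADER_LAYERS:
        payload = f"[{layer}]{payload}"
    return payload.encode("utf-8")  # physical layer: raw data on the wire


def receive(raw: bytes) -> str:
    """Strip the headers in the opposite order, from data link up to application."""
    frame = raw.decode("utf-8")
    for layer in reversed(HEADER_LAYERS):
        header = f"[{layer}]"
        if not frame.startswith(header):
            raise ValueError(f"missing {layer} header")
        frame = frame[len(header):]
    return frame


if __name__ == "__main__":
    wire = send("hello")
    print(wire)
    print(receive(wire))
```

The outermost header belongs to the lowest layer, which is why the receiver has to unwrap in the reverse order.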

Advantages of the OSI model for users include: understanding communication between different components, helping with troubleshooting, and determining what hardware/software is needed on a network.

Advantages of the OSI model for manufacturers include: it allows software to be created that can communicate with any product regardless of vendor, it shows which parts of a network the manufacturer's product will work with, and it tells manufacturers which layer of the network their product sits at.

UDP

UDP (User Datagram Protocol) is an alternative to the more commonly used TCP. Unlike TCP, UDP is unreliable, connectionless and message-oriented. Because it is fast and efficient with small amounts of data, UDP is usually used for video/audio calls, where two users need to hear each other as quickly as possible. Other uses of UDP include online gaming, audio/video streaming and VoIP.
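The connectionless nature of UDP shows up clearly in code: the sender never connects or performs a handshake, it just sends a datagram to an address. The sketch below uses Python's standard `socket` module and sends a single datagram over the loopback interface. The function name is made up, and the two second timeout is an arbitrary choice for the example.

```python
import socket


def udp_roundtrip(message: bytes) -> bytes:
    """Send one datagram to a receiver on the loopback interface and return what arrived."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
        receiver.settimeout(2.0)         # UDP gives no delivery guarantee, so do not wait forever
        sender.sendto(message, receiver.getsockname())  # no connection is set up first
        data, _sender_address = receiver.recvfrom(4096)
        return data


if __name__ == "__main__":
    print(udp_roundtrip(b"ping"))
```

On a real network the datagram could be lost or arrive out of order, and UDP itself would not notice. Applications that need reliability have to add it themselves or use TCP.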

Services

DHCP

DHCP (Dynamic Host Configuration Protocol) is a network protocol that dynamically assigns IP addresses and other configuration information to devices on the network. When a DHCP server receives a request, it allocates a free IP address and sends it back to the device in a DHCP OFFER packet.

DHCP is very important in a network as a host cannot send and receive data without an IP address, and manual configuration is tedious, especially for a lot of devices.
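The allocation step can be sketched as a small address pool. This is a toy model: the class and method names are made up, the address range and MAC addresses are example values, and real DHCP also involves a discover/offer/request/acknowledge exchange and lease expiry times, which are left out here. It assumes Python 3.9 or newer.

```python
import ipaddress


class DhcpPool:
    """Toy address pool: hands out the lowest free address and remembers it per MAC address."""

    def __init__(self, first: str, last: str) -> None:
        start = int(ipaddress.IPv4Address(first))
        end = int(ipaddress.IPv4Address(last))
        self._free = [ipaddress.IPv4Address(n) for n in range(start, end + 1)]
        self._leases: dict[str, ipaddress.IPv4Address] = {}

    def offer(self, mac: str) -> ipaddress.IPv4Address:
        """Return the address leased to this MAC, allocating a free one if needed."""
        if mac in self._leases:
            return self._leases[mac]
        if not self._free:
            raise RuntimeError("address pool exhausted")
        address = self._free.pop(0)
        self._leases[mac] = address
        return address

    def release(self, mac: str) -> None:
        """Return a device's address to the pool so it can be offered again."""
        address = self._leases.pop(mac, None)
        if address is not None:
            self._free.append(address)
            self._free.sort()


if __name__ == "__main__":
    pool = DhcpPool("192.168.1.100", "192.168.1.102")
    print(pool.offer("aa:bb:cc:dd:ee:01"))
    print(pool.offer("aa:bb:cc:dd:ee:02"))
```

Because the pool is shared, an admin only has to configure the range once instead of setting an address by hand on every device.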

DNS

DNS (Domain Name System) is a system that translates a domain name into an IP address. For example, a domain name such as youtube.com in fact points to an IP address that is linked to a web server. Since remembering IP addresses is difficult, it is a lot easier to remember a domain name. Instead of having to remember an IP address such as 192.168.1.1, or a more complex one such as 2400:cb00:2048:1::c629:d7a2, you just need to remember a much simpler domain name.
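The name-to-address mapping can be sketched with a lookup table. This is only an illustration of the idea: the domain `example.test` and its addresses are hypothetical values taken from ranges reserved for documentation, and a real resolver queries DNS servers rather than a local dictionary. It assumes Python 3.9 or newer.

```python
import ipaddress

# Hypothetical records for illustration only.
RECORDS = {
    "example.test": ["192.0.2.10", "2001:db8::10"],
}


def resolve(name: str, records: dict[str, list[str]] = RECORDS) -> list:
    """Look up a domain name and return its IPv4/IPv6 addresses as address objects."""
    key = name.rstrip(".").lower()  # domain names are case-insensitive
    if key not in records:
        raise KeyError(f"no records for {name!r}")
    return [ipaddress.ip_address(address) for address in records[key]]


if __name__ == "__main__":
    for address in resolve("Example.TEST"):
        print(f"IPv{address.version}: {address}")
```

For a real lookup, Python's standard library provides `socket.getaddrinfo`, which asks the system's configured resolver.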

HTTP

HTTP (Hypertext Transfer Protocol) is a network protocol which web browsers and servers use to communicate with each other. The protocol appears in the URL of websites (http://google.com). The way that HTTP works is pretty simple: end users request files from a server so that those files can be displayed in a web browser.

HTTP is an application layer protocol built on top of TCP/IP. HTTP communicates through messages, with the three main ones being GET, POST and HEAD. A GET message only contains a URL, and the server sends the web page back to the browser. A POST message carries any additional data parameters in the body of the request instead of appending them to the end of the URL. HEAD works similarly to GET but only sends back the header information, without the page itself.
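To make the difference between these messages concrete, the sketch below builds the text of a simple HTTP/1.1 request. The function name, the host `example.test` and the paths are made up for illustration, and only the minimum headers are included.

```python
def build_request(method: str, path: str, host: str, body: str = "") -> str:
    """Build the text of a minimal HTTP/1.1 request message."""
    lines = [f"{method} {path} HTTP/1.1", f"Host: {host}"]
    if body:
        # Data sent with POST travels in the body, described by these headers.
        lines.append("Content-Type: application/x-www-form-urlencoded")
        lines.append(f"Content-Length: {len(body.encode('utf-8'))}")
    # A blank line separates the headers from the (possibly empty) body.
    return "\r\n".join(lines) + "\r\n\r\n" + body


if __name__ == "__main__":
    # GET: parameters are appended to the URL.
    print(build_request("GET", "/search?q=networks", "example.test"))
    # POST: the same parameters are carried in the request body instead.
    print(build_request("POST", "/search", "example.test", "q=networks"))
    # HEAD: same shape as GET, but the server replies with headers only.
    print(build_request("HEAD", "/search?q=networks", "example.test"))
```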

HTTPS uses HTTP and adds security on top of it (hence the name): HTTP stands for Hypertext Transfer Protocol, whilst HTTPS stands for Hypertext Transfer Protocol Secure. HTTP was used a lot in the past by older websites, until 2014, when Google recommended that sites use HTTPS instead, since it is more secure and encrypts your data in transit, which makes it harder for attackers to intercept or tamper with a site's traffic. If you are running a company that handles financial transactions, people will be entering debit card information on your site, and if a hacker were able to intercept it, your service would no longer be trusted by anyone who wants to keep their card details safe.

RADIUS

The RADIUS (Remote Authentication Dial-In User Service) protocol is a client-server networking protocol that handles communication between a central authentication server and individual users who want to gain access to a network or server. RADIUS allows users who connect remotely to be authenticated against the central server.

Users connect to a RADIUS client, which passes their details to the RADIUS authentication server, where information such as a username, password and IP address is verified.

Remote access

Remote access is a piece of software (or an operating system feature) that allows a PC's desktop environment to be controlled from a remote location, usually from another PC. The main use of remote access is with servers: with the server's IP address you can access the server even if you cannot physically get to it.

Two main protocols used for remote access are SSH and Telnet. There aren't many differences between them, since they mostly function the same way, but Telnet is a much older protocol and so has poor security. For example, it lacks encryption, which means that all data is sent in plain text. This is a massive issue if you are sending things such as passwords, and it leaves Telnet vulnerable to man-in-the-middle attacks.

The other remote access protocol is SSH, which is similar to Telnet but has a lot more security. It encrypts all data sent through it, so nothing is sent in plain text, unlike Telnet. It also offers better authentication: for example, you can create SSH keys so that the server only accepts devices that hold the key, instead of relying on the usual password authentication.

Bibliography

-

Advantages and disadvantages of hybrid topology (2022) GeeksforGeeks. Available at: https://geeksforgeeks.org/advantages-and-disadvantages-of-hybrid-topology (Accessed: November 14, 2022).

-

Bamford, J. (2020) Edward Snowden: The untold story, Wired. Conde Nast. Available at: https://www.wired.com/2014/08/edward-snowden (Accessed: December 12, 2022). He would also begin to appreciate the enormous scope of the NSA’s surveillance capabilities, an ability to map the movement of everyone in a city by monitoring their MAC address, a unique identifier emitted by every cell phone, computer, and other electronic device.

-

What is a subnet? | how subnetting works | cloudflare (no date). Cloudflare. Available at: https://www.cloudflare.com/learning/network-layer/what-is-a-subnet (Accessed: November 21, 2022). This is why subnetting comes in handy: subnetting narrows down the IP address to usage within a range of devices.

-

What is subnet mask? definition & faqs (2020) Avi Networks. Available at: https://avinetworks.com/glossary/subnet-mask (Accessed: November 21, 2022).

-

Remote Working Statistics UK (2022) StandOut CV. Available at: https://standout-cv.com/remote-working-statistics-uk (Accessed: January 17, 2023). 30% of the UK workforce is working remotely at least once a week in 2022.

-

Remote Working Statistics UK (2022) StandOut CV. Available at: https://standout-cv.com/remote-working-statistics-uk (Accessed: January 17, 2023). 1 in 5 Brits want to work full-time remotely.

-

Digiconomist (2022) Bitcoin Energy Consumption Index - Digiconomist. [online] Available at: https://digiconomist.net/bitcoin-energy-consumption (Accessed: 13 September, 2022).